Most AI discussions with leadership teams focus on infrastructure: data, platforms, integrations, vendors. All of this matters. But in the 30–300 employee range, your real constraint is almost never technical.

It is cultural.

AI will not create your culture; it will expose it. And in many cases, break it open.

In the EU, the share of enterprises using AI technologies rose from about 8% in 2023 to 13.48% in 2024, a significant jump in just one year. OECD research shows that around 31% of SMEs already use generative AI directly, and many more rely on platforms where AI is embedded. The pressure to “do something with AI” is real, especially from boards and clients.

At the same time, European institutions and safety agencies are raising flags about AI-driven and algorithmic worker management, pointing to increased monitoring, work intensity, stress, and burnout risks if workers are not involved in design and governance.

This is the cultural fault line: speed vs trust.

The three cultural cracks AI exposes first

From my work with leaders, and supported by emerging research, three cultural tensions show up almost immediately when AI enters the organisation:

- Fear of replacement vs narrative of empowerment

People may publicly endorse AI while privately fearing loss of relevance. If this cannot be spoken, you will see passive resistance, quiet sabotage, or performative overwork.

- Transparency vs opacity

When AI systems allocate tasks or influence decisions without clear explanation, trust erodes. People don’t need to understand the algorithmic math, but they do need to understand the logic of how it is used.

- Blame vs shared responsibility

Under pressure, it becomes convenient to blame “the system” or “the tool” for decisions nobody wants to own. This weakens leadership authority and accountability at every level.

These are not technical problems. They are cultural patterns. And they already exist before AI arrives.

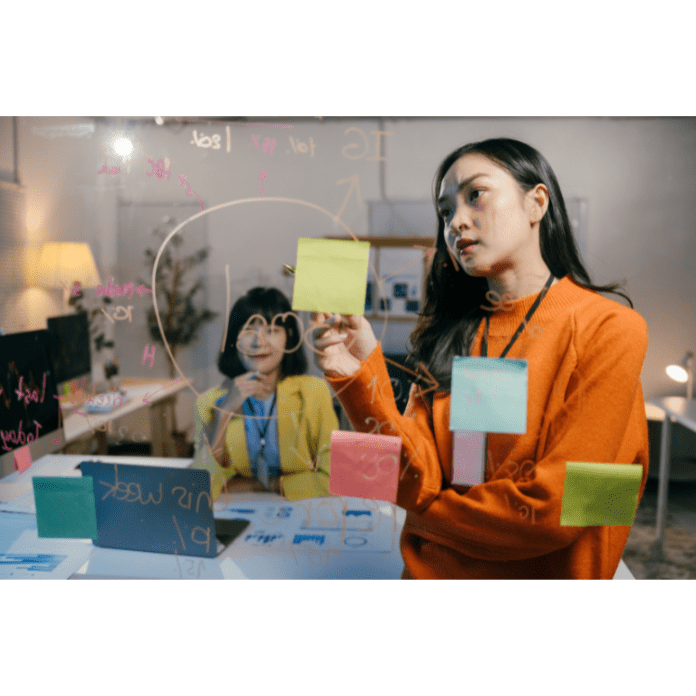

Behaviour mapping as cultural due diligence

Before scaling AI projects, SMEs should perform something like cultural due diligence: a systematic exploration of how people actually behave, decide, and relate across the organisation.

Behaviour mapping is one way to do this. It makes visible:

- How leadership power is perceived and responded to.

- Where conflict is allowed, and where it is silently disallowed.

- How information really travels (not how the process map says it should).

- Which emotional states dominate under pressure: fear, cynicism, resignation, or constructive urgency.

With this map, you can design AI adoption in a way that strengthens culture rather than destabilising it.

For example:

- If you discover high levels of learned helplessness (“nothing changes here”), you know that introducing AI as a top-down mandate will deepen disengagement. You’ll need participatory design and visible small wins.

- If you see strong silos, you’ll know that data-driven AI tools may reinforce those silos unless you intentionally create cross-functional rituals for interpretation and decision.

AI as a test of leadership maturity

The way you introduce AI will send a cultural signal more powerful than any slide deck.

If implementation is rushed, secretive, and solely cost-driven, people will conclude: “In this company, tools matter more than humans.”

If it is transparent, participatory and anchored in clear behavioural expectations, the signal is different: “In this company, we use technology to protect and enhance what is human.”

In practical terms, culture-by-design for AI-ready teams means:

- Clear principles: for example, “No major HR decision without a human conversation”, or “Metrics are a starting point for dialogue, not verdicts.”

- Shared language for behavioural patterns, so that talking about fear, resistance, or over-control is not taboo but part of daily leadership.

- Structured follow-up after AI launches: not just technical retrospectives, but emotional and relational ones. What changed in trust? In motivation? In perceived fairness?

Why this matters strategically

As AI tools become cheaper and more standardised, culture will be one of the few defensible differentiators. McKinsey and others can estimate trillions in potential value, but they all add the same caveat: value is only realised if organisations can adapt their ways of working accordingly.

For a 200-person company, the crucial question is:

- Will your best people stay and grow with you through this transition?

- Or will they leave for environments where they feel both technologically equipped and psychologically safe?

You cannot buy that with another platform. You build it by understanding, in detail, the behavioural architecture of your teams and making deliberate cultural choices around AI.

So before your next AI investment, consider this sequence:

- Map your culture behaviourally—not in values statements, but in observable patterns.

- Name the cracks AI is likely to expose.

- Design AI adoption as a vehicle to repair and strengthen those areas, rather than bypass them.

AI will transform your systems. But it is your culture—by accident or by design—that will determine whether that transformation becomes a competitive advantage, or just another wave of disruption your people have to survive.